Preparation

Perform the following steps on all hosts.

Configure the hosts

Set the hostname (k8s-master on the master; the worker nodes use names such as k8s-node-1)

$ hostnamectl --static set-hostname k8s-master

Configure /etc/hosts

$ echo "172.31.21.226 k8s-master
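The echo above is cut off in this copy of the post. The function below is a hedged sketch of the same step. It appends an `IP hostname` line only when that hostname is not already mapped, so rerunning it is harmless. The name `add_host_entry` is made up for illustration. Only the master entry comes from this guide, and any node IPs you pass are your own.

```shell
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless some line already maps NAME.
# FILE is a parameter so you can try it on a scratch copy before /etc/hosts.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 only if NAME appears as a hostname field (field 2 onward).
  if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# On a real host (node IPs are placeholders, not taken from this guide):
#   add_host_entry /etc/hosts 172.31.21.226 k8s-master
#   add_host_entry /etc/hosts <node-1-ip>   k8s-node-1
```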

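Disable the firewall and SELinux

$ systemctl stop firewalld && systemctl disable firewalld

Then enable the bridge netfilter settings so that bridged container traffic passes through iptables:

$ echo "net.bridge.bridge-nf-call-ip6tables = 1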
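Only the first sysctl line survives above, and the SELinux commands are missing entirely. The sketch below shows one common way to handle both. It assumes the sysctl settings go in /etc/sysctl.d/k8s.conf. The helper names are hypothetical. On some kernels the net.bridge keys only exist after `modprobe br_netfilter`.

```shell
# write_k8s_sysctl FILE  (hypothetical helper)
# Writes the bridge-netfilter settings so iptables/ip6tables see bridged traffic.
write_k8s_sysctl() {
  cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# disable_selinux_config FILE  (hypothetical helper)
# Sets SELINUX=disabled so the change survives a reboot; SELINUXTYPE= is untouched.
disable_selinux_config() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# On a real host, as root:
#   setenforce 0                                  # permissive mode right away
#   disable_selinux_config /etc/selinux/config    # persists after reboot
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf
#   sysctl --system                               # reload all sysctl.d files
```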

Disable swap

$ swapoff -a

To disable it permanently, comment out the swap entries in /etc/fstab:

$ vim /etc/fstab
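You can also comment out the swap entries with a small filter instead of editing /etc/fstab by hand. This step matters because kubelet 1.8 refuses to start while swap is on: its `--fail-swap-on` flag defaults to true. The following is a minimal sketch. It assumes the standard fstab layout, where the third field is the filesystem type. `comment_swap_entries` is a hypothetical helper.

```shell
# comment_swap_entries FILE  (hypothetical helper)
# Prefixes "#" to every uncommented line whose filesystem type (field 3) is swap.
# Writes through a temp file, then copies back so FILE keeps its inode and mode.
comment_swap_entries() {
  local file="$1" tmp
  tmp=$(mktemp) || return 1
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# On a real host, as root (keep a backup first):
#   cp /etc/fstab /etc/fstab.bak && comment_swap_entries /etc/fstab
```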

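Download the offline installation packages

The latest k8s packages are hosted on packages.cloud.google.com, which is blocked in mainland China, so you need a proxy to download them.

$ wget https://packages.cloud.google.com/yum/pool/aeaad1e283c54876b759a089f152228d7cd4c049f271125c23623995b8e76f96-kubeadm-1.8.4-0.x86_64.rpm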
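Only the kubeadm URL is shown above, so it is easy to miss a package before running `yum localinstall *.rpm`. The function below is a hedged pre-flight check that looks for one RPM per package name in a directory. kubelet, kubectl and kubernetes-cni are the other packages in Google's Kubernetes yum repository. Their exact versions are not given here, and `missing_rpms` is a hypothetical helper.

```shell
# missing_rpms DIR NAME...  (hypothetical helper)
# Prints each package NAME that has no "*NAME-<digit>*.rpm" file in DIR
# (downloaded files carry a hash prefix, e.g. <hash>-kubeadm-1.8.4-0.x86_64.rpm).
# Returns 0 only when every package is present.
missing_rpms() {
  local dir="$1" name f found rc=0
  shift
  for name in "$@"; do
    found=0
    for f in "$dir"/*"$name"-[0-9]*.rpm; do
      # An unmatched glob stays literal, so check that the file really exists.
      [ -e "$f" ] && { found=1; break; }
    done
    if [ "$found" -eq 0 ]; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}

# Usage, in the download directory:
#   missing_rpms . kubeadm kubelet kubectl kubernetes-cni && yum -y localinstall *.rpm
```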

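Install Docker

Perform the following steps on all hosts.

Kubernetes 1.8.4 currently supports Docker 17.03, so install that version rather than the latest docker-ce.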

Add the Aliyun repository (yum-config-manager is provided by the yum-utils package)

$ yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

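Install the specific Docker version (--setopt=obsoletes=0 stops yum from replacing the pinned packages with newer ones that obsolete them)

$ yum install -y --setopt=obsoletes=0 \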

Configure a Docker registry mirror (accelerator)

$ sudo mkdir -p /etc/docker
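Only the mkdir step of the accelerator setup survives. Docker reads registry mirrors from the `registry-mirrors` key in /etc/docker/daemon.json, and the sketch below writes that file. The mirror URL is a placeholder: Aliyun issues each account a personal `https://<id>.mirror.aliyuncs.com` address. The helper name is hypothetical. Docker must be restarted afterwards.

```shell
# write_docker_daemon_json FILE MIRROR_URL  (hypothetical helper)
# Writes a daemon.json containing only registry-mirrors.
# Note: this overwrites any existing daemon.json.
write_docker_daemon_json() {
  local file="$1" mirror="$2"
  mkdir -p "$(dirname "$file")"
  printf '{\n  "registry-mirrors": ["%s"]\n}\n' "$mirror" > "$file"
}

# On a real host (replace the placeholder with your own mirror address):
#   write_docker_daemon_json /etc/docker/daemon.json https://<id>.mirror.aliyuncs.com
#   systemctl daemon-reload && systemctl restart docker
```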

Install k8s

Perform the following steps on all hosts.

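Start kubelet

$ yum -y localinstall *.rpm

At this point kubelet will still be reporting errors. You can ignore them for now: it keeps failing until kubeadm generates the certificates and configuration it needs.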

$ journalctl -u kubelet --no-pager

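Prepare the Docker images

kubeadm needs the following images, which are hosted on gcr.io:

gcr.io/google_containers/kube-apiserver-amd64 v1.8.4

You can pull them to the local host with this script.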
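The link to the script has not survived. A common approach is to pull each image from a registry your network can reach, then retag it with its original gcr.io name. kubeadm then finds the image locally and skips the pull. The following is a minimal sketch. You must supply the mirror registry yourself, and `mirror_image` and `run` are hypothetical helpers. Setting `DRY_RUN=1` prints the docker commands instead of executing them.

```shell
# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# mirror_image MIRROR IMAGE:TAG  (hypothetical helper)
# Pulls MIRROR/<name:tag>, tags it as IMAGE:TAG, then drops the mirror tag.
mirror_image() {
  local mirror="$1" image="$2"
  local name="${image##*/}"   # e.g. kube-apiserver-amd64:v1.8.4
  run docker pull "$mirror/$name"
  run docker tag "$mirror/$name" "$image"
  run docker rmi "$mirror/$name"
}

# Usage (the mirror registry is a placeholder; repeat for every image in the list):
#   mirror_image <your-mirror>/google_containers gcr.io/google_containers/kube-apiserver-amd64:v1.8.4
```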

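Configure the k8s cluster

Initialize the master

$ kubeadm init --apiserver-advertise-address=172.31.21.226 --kubernetes-version=v1.8.4 --pod-network-cidr=10.244.0.0/16

--pod-network-cidr=10.244.0.0/16 matches flannel's default network; flannel is installed further down. When init finishes, it prints the kubeadm join command that the nodes will need, so keep a copy of it.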
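Every node needs the join command, so it helps to keep the init output. One way is to tee the output to a log and extract the join line from it later, as sketched below. The log filename and the `extract_join_cmd` helper are assumptions. The pattern also assumes the join command is printed on a single line, as it appears in this guide.

```shell
# On the master, capture the init output once:
#   kubeadm init ... 2>&1 | tee kubeadm-init.log

# extract_join_cmd LOG  (hypothetical helper)
# Prints the first "kubeadm join ..." line with its leading whitespace removed.
extract_join_cmd() {
  sed -n 's/^[[:space:]]*\(kubeadm join .*\)$/\1/p' "$1" | head -n 1
}

# Usage:
#   extract_join_cmd kubeadm-init.log
```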

Configure kubectl access for your user

$ mkdir -p $HOME/.kube && \
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config && \
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the cluster status

$ kubectl get pod --all-namespaces -o wide

NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE   IP              NODE
kube-system   etcd-k8s-master                      1/1     Running   0          1m    172.31.21.226   k8s-master
kube-system   kube-apiserver-k8s-master            1/1     Running   0          1m    172.31.21.226   k8s-master
kube-system   kube-controller-manager-k8s-master   1/1     Running   0          1m    172.31.21.226   k8s-master
kube-system   kube-dns-545bc4bfd4-84pjx            0/3     Pending   0          2m    <none>          <none>
kube-system   kube-proxy-7d2tc                     1/1     Running   0          2m    172.31.21.226   k8s-master
kube-system   kube-scheduler-k8s-master            1/1     Running   0          1m    172.31.21.226   k8s-master

$ kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}

Install the pod network (flannel)

$ kubectl apply -f https://raw.githubusercontent.com/batizhao/dockerfile/master/k8s/flannel/kube-flannel.yml

Run kubectl get pod --all-namespaces -o wide again, and kube-dns-545bc4bfd4-84pjx should now be Running. If it is not, inspect it with:

$ kubectl -n kube-system describe pod kube-dns-545bc4bfd4-84pjx
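Instead of rerunning the listing by hand, you can filter it for pods that are not Running. The sketch below assumes the column layout shown above, where STATUS is the fourth column when --all-namespaces is used. `not_running_pods` is a hypothetical helper.

```shell
# not_running_pods  (hypothetical helper)
# Reads `kubectl get pod --all-namespaces` output on stdin and prints
# "namespace/name status" for every pod whose STATUS is not Running.
not_running_pods() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}

# Example: block until every pod is Running, polling every 5 seconds.
#   until [ -z "$(kubectl get pod --all-namespaces | not_running_pods)" ]; do sleep 5; done
```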

Join the nodes to the cluster

Run the following on each node, using the token and hash that kubeadm init printed on your master:

$ kubeadm join --token d87240.989b8aa6b0039283 172.31.21.226:6443 --discovery-token-ca-cert-hash sha256:4c2b5469ddc4f49ba15f3146bea5bf9ba8f67f68bdc9ef1ff6cb026d39b94dea
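The token and hash above belong to the author's cluster. If you lose the join command, you can recompute the --discovery-token-ca-cert-hash value on the master from the cluster CA. It is the SHA-256 of the CA certificate's DER-encoded public key. The openssl pipeline below follows the one given in the kubeadm documentation. /etc/kubernetes/pki/ca.crt is kubeadm's default CA path, and `ca_cert_hash` is a hypothetical wrapper name. The pipeline assumes an RSA CA key, which is what kubeadm generates.

```shell
# ca_cert_hash CERT  (hypothetical wrapper)
# Prints "sha256:<hex>" for the certificate's DER-encoded public key,
# the format that kubeadm join --discovery-token-ca-cert-hash expects.
ca_cert_hash() {
  local hex
  hex=$(openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')   # drop the "(stdin)= " style prefix
  printf 'sha256:%s\n' "$hex"
}

# On the master:
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```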

To control the cluster from any other host

$ mkdir -p $HOME/.kube

$ scp root@172.31.21.226:/etc/kubernetes/admin.conf $HOME/.kube/config

$ chown $(id -u):$(id -g) $HOME/.kube/config

$ kubectl get nodes

On the master, confirm that all nodes are Ready

$ kubectl get nodes

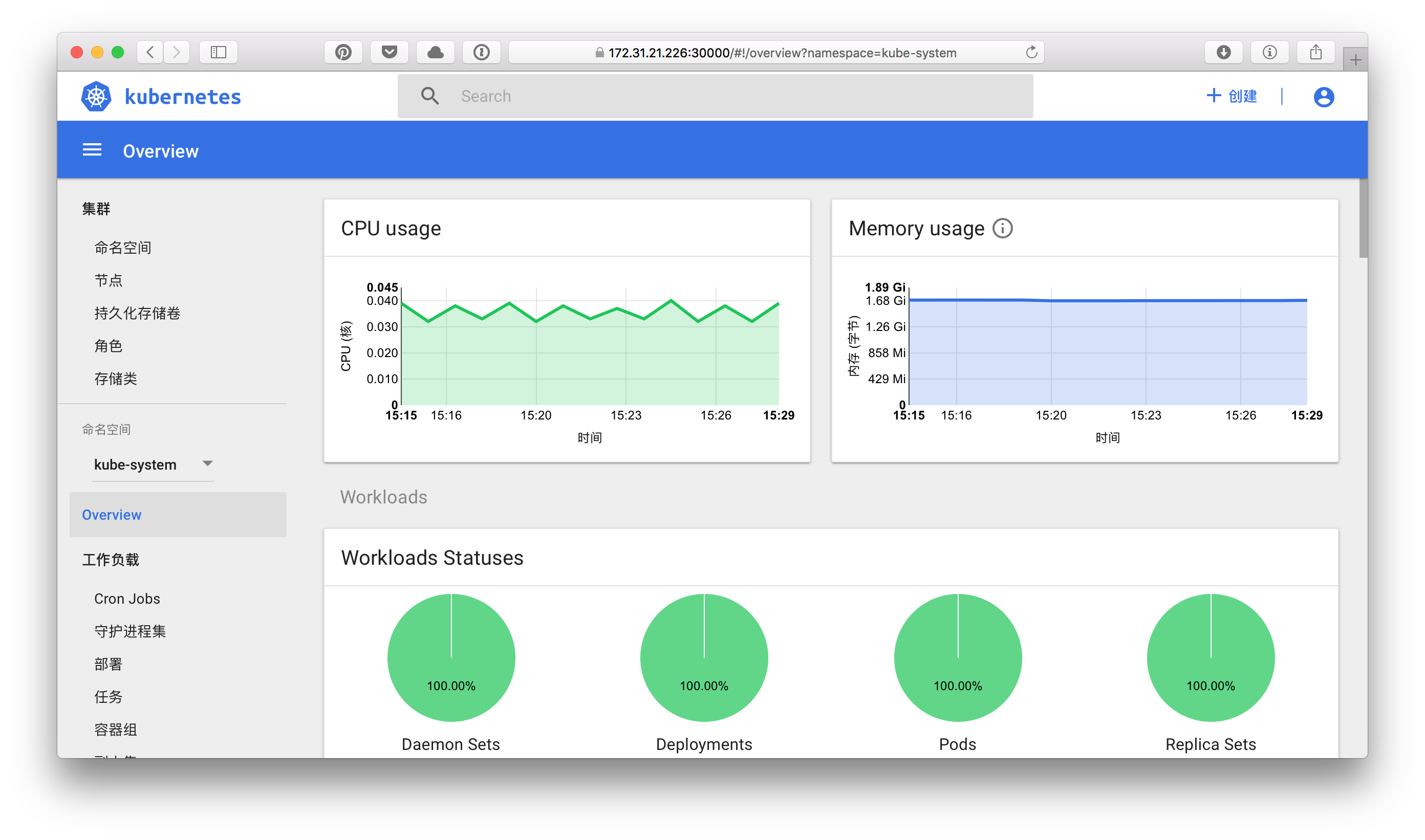

Install the dashboard

Prepare the Docker image

gcr.io/google_containers/kubernetes-dashboard-amd64 v1.8.0

You can pull it to the local host with this script.

Deploy

$ kubectl apply -f https://raw.githubusercontent.com/batizhao/dockerfile/master/k8s/kubernetes-dashboard/kubernetes-dashboard.yaml

Confirm the dashboard status

$ kubectl get pod --all-namespaces -o wide

kube-system kubernetes-dashboard-7486b894c6-2l4c5 1/1 Running 0 17s 10.244.1.3 k8s-node-1

Access

Alternatively, run the following on any host that has kubectl configured for the cluster (my Mac, for example):

$ kubectl proxy

Then open: http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

View the login token

$ kubectl -n kube-system get secret | grep kubernetes-dashboard-admin
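The command above only finds the secret's name. The sketch below covers the follow-up step: it picks out that name so you can describe the secret, and the output shows the decoded token. It assumes, as the grep suggests, that the author's dashboard manifest creates a service account named kubernetes-dashboard-admin, whose token secret name starts with that prefix. `dashboard_secret_name` is a hypothetical helper.

```shell
# dashboard_secret_name  (hypothetical helper)
# Reads `kubectl -n kube-system get secret` output on stdin and prints the
# first secret name that starts with kubernetes-dashboard-admin.
dashboard_secret_name() {
  awk '$1 ~ /^kubernetes-dashboard-admin/ { print $1; exit }'
}

# Usage: the "token:" field of the describe output is the login token.
#   secret=$(kubectl -n kube-system get secret | dashboard_secret_name)
#   kubectl -n kube-system describe secret "$secret"
```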

Install heapster

Prepare the Docker image

gcr.io/google_containers/heapster-amd64:v1.4.0

You can pull it to the local host with this script.

Deploy

$ kubectl apply -f https://raw.githubusercontent.com/batizhao/dockerfile/master/k8s/heapster/heapster-rbac.yaml